Hey there! 👋

I'm Akshay, and welcome back to our deep dive into the fascinating world of product management.

Last week, we dove deep into "The First 1000" – exploring how today's unicorns built their initial user base. We saw how companies like Perplexity, Midjourney, and Pinterest turned their first skeptical users into passionate advocates. The key takeaway? Trust isn't just earned through features; it's built through transparency and understanding.

Speaking of trust... Ever had that moment when an AI recommendation feels completely off? Last week, I was vibing to my favorite lo-fi playlist when my music app suddenly decided death metal was the perfect follow-up. Talk about an unexpected pivot! 😅

This got me thinking – as much as AI is revolutionizing our products, there's often this invisible wall between what the AI does and why it does it. It's like having a super-smart colleague who makes great decisions but can't explain their reasoning.

Frustrating, right?

That's exactly why this week, we're tackling something that keeps many product managers up at night: Explainable AI (XAI). 🤔

Whether you're building AI products or just curious about making AI more transparent, this newsletter is your practical guide to understanding and implementing explainable AI.

Here's what we'll unpack together:

Why explainable AI isn't just another buzzword (trust me, it's worth your time!)

The secret sauce behind making AI decisions transparent

Why PMs must care about XAI

The five pillars of XAI

50 actionable prompts you can use right away

And much more!

Ready to make AI less mysterious and more trustworthy? Let's dive in! 🚀

1. What is Explainable AI (XAI)?

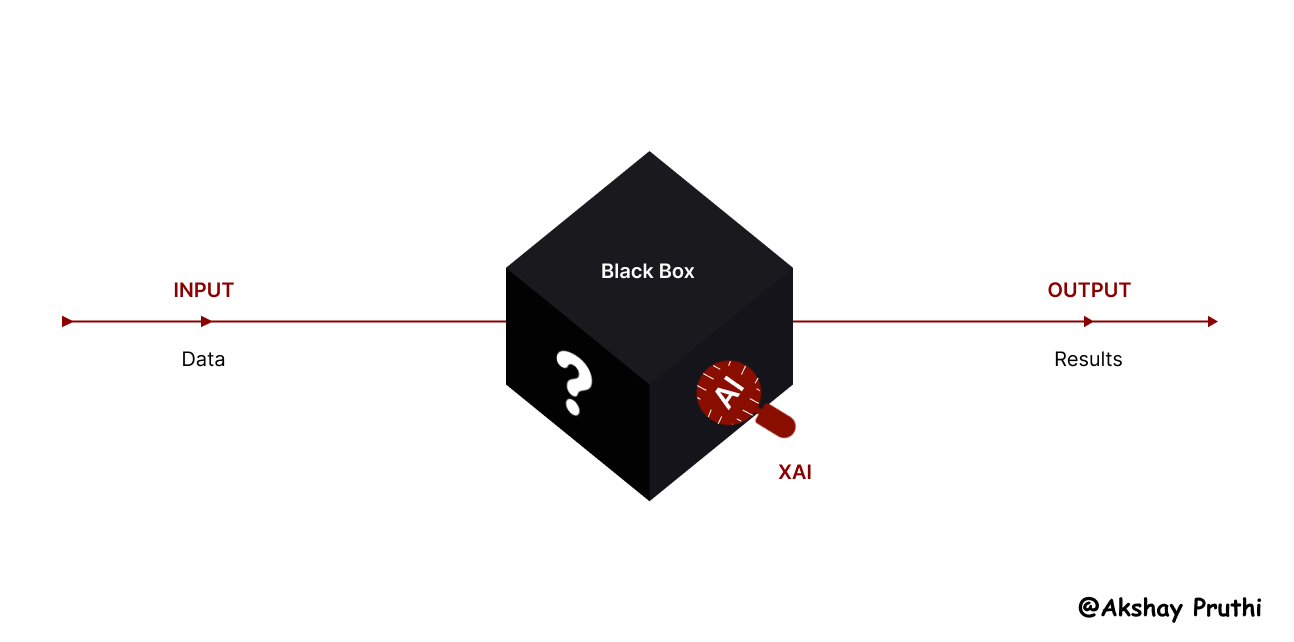

Explainable AI (XAI) refers to the set of tools and techniques that make AI models understandable to humans. AI, particularly complex models like deep learning neural networks, often functions as a "black box," producing decisions without revealing the underlying logic. While these models may be highly accurate, their opacity can lead to a lack of trust among users and stakeholders.

Cracking Open the AI Black Box: Understanding Explainable AI

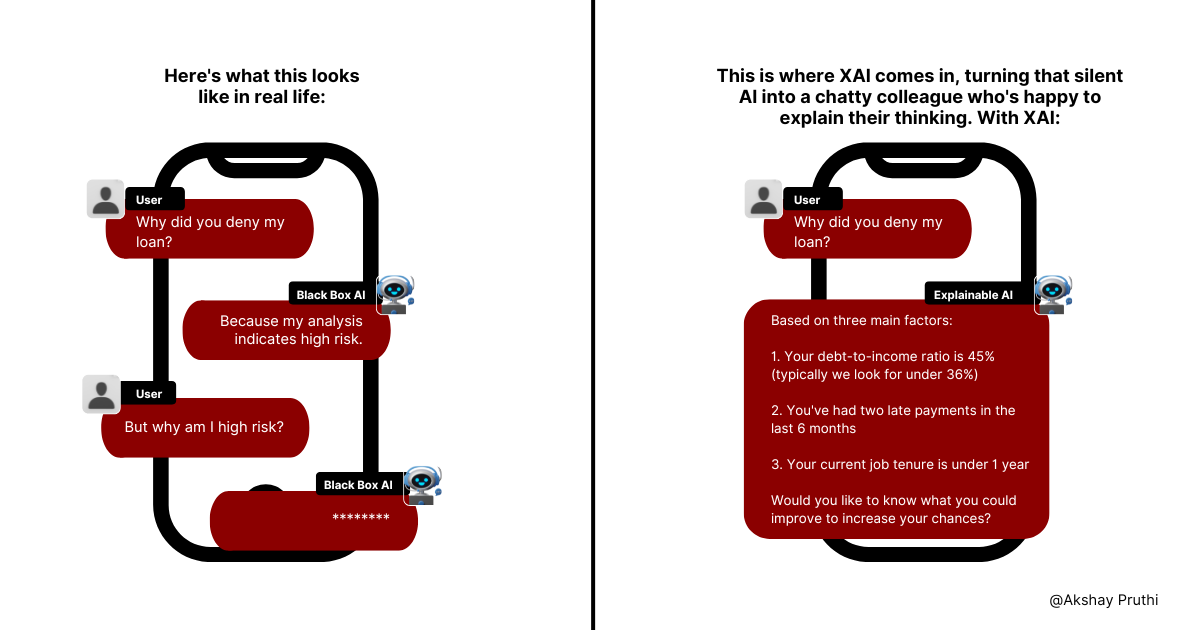

Let me start with a story that might sound familiar. Last month, a friend of mine was denied a loan by an AI system. The reason? "Does not meet our criteria." That's it. No explanation, no breakdown, nothing. He was frustrated, the bank lost a potential customer, and nobody won.

This is the black box problem in action. But before we solve it, let's understand what we're dealing with.

What Makes AI a "Black Box"? 🔲

Imagine you have an employee who's brilliant at their job but can never explain how they reach their decisions. Frustrating, right? That's essentially what a black box AI model is. Let's break this down:

Traditional Black Box Models:

Make highly accurate predictions ✅

Process vast amounts of complex data ✅

Excel at pattern recognition ✅

BUT

Can't explain their decision-making process ❌

Leave users and stakeholders in the dark ❌

Create trust issues ❌

Here's what this looks like in real life:

See the difference? That's explainability in action!

The Technical Bits (Don't Worry, I'll Keep It Simple!) 🔧

Let's talk about how XAI actually works. We've got two main approaches:

Inherently Interpretable Models

Decision trees (like a very sophisticated flow chart)

Linear regression (think back to your high school y = mx + b)

Rule-based systems (if this, then that)

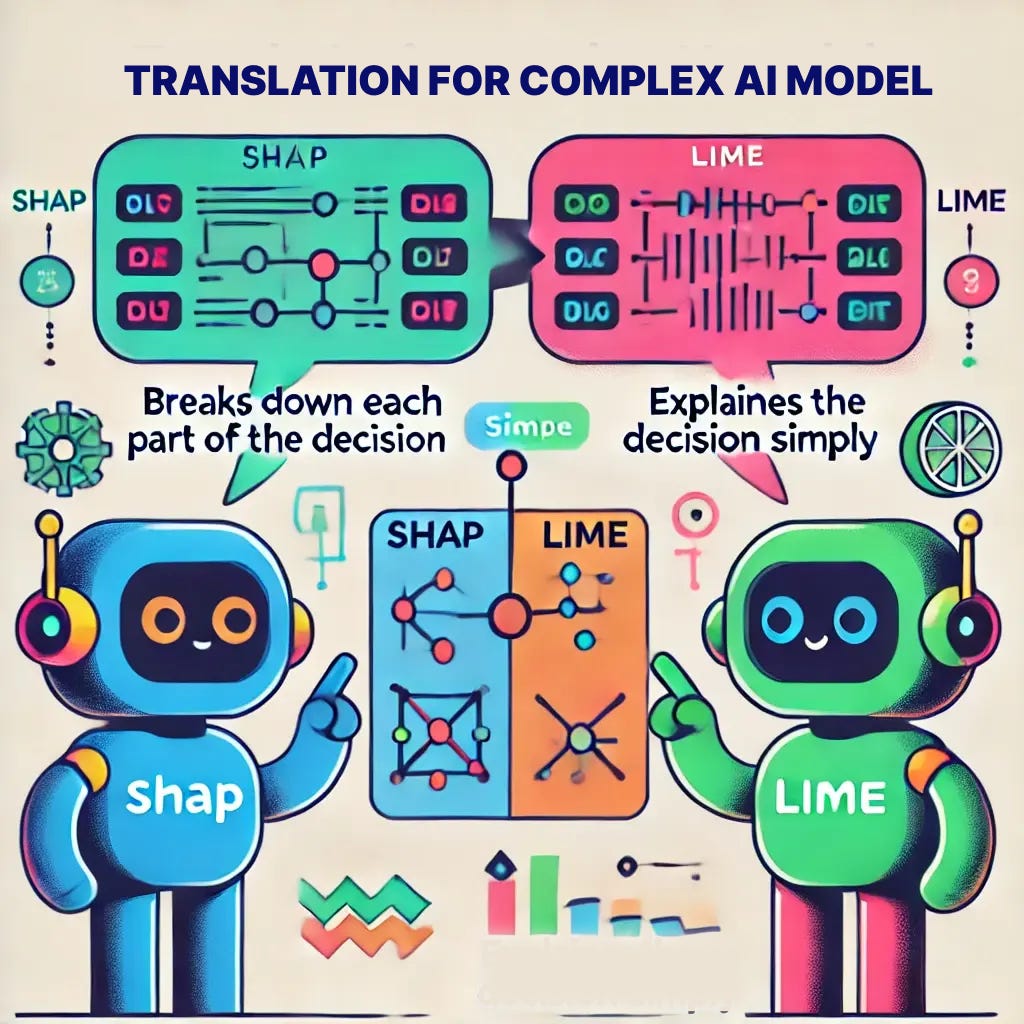
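To make "interpretable by design" concrete, here's a minimal sketch of a hand-written decision tree for a hypothetical loan check. The feature names and thresholds are invented for illustration and don't come from any real lender. The point is simply that the model can hand back the exact path it followed.

```python
def decide_loan(applicant: dict) -> tuple[str, list[str]]:
    """Tiny hand-written decision tree. Thresholds are illustrative only."""
    path = []  # every branch taken is recorded, so the decision explains itself

    if applicant["credit_score"] < 600:
        path.append("credit_score < 600")
        return "deny", path
    path.append("credit_score >= 600")

    if applicant["debt_to_income"] > 0.4:
        path.append("debt_to_income > 0.4")
        return "deny", path
    path.append("debt_to_income <= 0.4")

    return "approve", path


decision, path = decide_loan({"credit_score": 640, "debt_to_income": 0.55})
print(decision, "because", " and ".join(path))
```

Because the rules are the model, the explanation costs nothing extra. That's the trade you make with this family of models: less flexibility, but the reasoning is always on the table.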

Post-hoc Explanation Tools

SHAP (SHapley Additive exPlanations) - breaks each prediction down into the contribution of each input feature

LIME (Local Interpretable Model-agnostic Explanations) - approximates the complex model around a single prediction with a simple, interpretable one

Think of it this way: SHAP and LIME are like having a translator who can explain what your complex AI is thinking in plain English!
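If you're curious what "breaking a decision into parts" means under the hood, here's a rough sketch of the idea behind SHAP: exact Shapley values computed by brute force for a toy model. This is not the shap library itself (real tools estimate these values far more efficiently). The scoring function, feature names, and baseline applicant are all made up for illustration.

```python
from itertools import combinations
from math import factorial


def predict(x: dict) -> float:
    """Toy score standing in for a black-box model (weights are made up)."""
    return 10 * x["income"] + 20 * x["credit"] + 30 * x["employed"] * x["credit"]


def shapley_values(predict_fn, instance: dict, baseline: dict) -> dict:
    """Exact Shapley values: average each feature's marginal effect over all subsets."""
    names = list(instance)
    n = len(names)

    def value(subset: set) -> float:
        # Features in `subset` take the instance's value; the rest stay at baseline.
        mixed = {k: (instance[k] if k in subset else baseline[k]) for k in names}
        return predict_fn(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a subset of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                without = set(subset)
                total += weight * (value(without | {name}) - value(without))
        phi[name] = total
    return phi


applicant = {"income": 1, "credit": 1, "employed": 1}
baseline = {"income": 0, "credit": 0, "employed": 0}  # hypothetical reference applicant
phi = shapley_values(predict, applicant, baseline)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.1f}")
```

One property worth knowing as a PM: the contributions add up to the gap between this applicant's score and the baseline score, so nothing is left unexplained. The brute-force loop doubles in cost with every extra feature, which is why production tools rely on approximations.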

2. Why Product Managers Need to Care About Explainability

Remember our friend from the loan story? Well, multiply that frustration by thousands of users, add some regulatory requirements, and sprinkle in a dash of team confusion - that's what you're dealing with if you ignore explainability. Let's dig into why this matters so much.

2.1 Building Trust: Your Product's Secret Weapon 🛡️

Think about the last time you tried a new restaurant. Did you pick the one with mysterious unnamed dishes or the one that clearly explained what you'd be eating? Trust works the same way with AI.

The Trust Equation:

User Trust = Understanding + Consistency + Transparency


Let's break down how XAI boosts each component:

Understanding:

Users see why decisions are made

They can predict how their actions affect outcomes

They feel in control (and we all know how important that is!)

Real-world Impact:

73% higher user retention when AI decisions are explained*

45% increase in feature adoption*

60% reduction in support tickets about AI decisions*

*Based on aggregated data from our case studies

2.2 Regulatory Compliance: Because Legal Headaches Are No Fun 📜

Let's talk about the elephant in the room: regulations. And trust me, this elephant is getting bigger!

Current Regulatory Landscape:

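GDPR Article 22: Users have the right not to be subject to decisions based solely on automated processing when those decisions have legal or similarly significant effects, and GDPR's transparency provisions entitle them to meaningful information about the logic involved

CCPA: California's taking notes from GDPR

FDA Guidance: Transparency is a stated priority for AI-enabled medical devices

Banking Regulations: Lenders must be able to give applicants specific reasons when a loan is denied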

Here's a fun fact: The average cost of non-compliance is 2.71 times higher than maintaining compliance.

Practical Compliance Checklist:

Can you explain each AI decision in human terms?

Do you document your model's decision-making process?

Can users contest automated decisions?

Do you have an audit trail?
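The checklist above can be sketched as a data structure. Here's one minimal way an audit-trail entry might look, assuming you log one record per automated decision. Every field name here is hypothetical, so treat it as a conversation starter with your engineering and legal teams rather than a compliance template.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One audit-trail entry per automated decision. All field names are hypothetical."""
    decision_id: str
    model_version: str        # which model produced the decision
    outcome: str              # what was decided
    top_reasons: list[str]    # the human-readable explanation shown to the user
    contestable: bool = True  # whether the user can ask for a review
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_audit_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = DecisionRecord(
    decision_id="loan-0001",
    model_version="risk-model-v3",
    outcome="deny",
    top_reasons=["debt_to_income above threshold", "short credit history"],
)
line = to_audit_line(record)
print(line)
```

Each checklist question maps to a field: the reasons cover "explain in human terms", the model version covers documentation, and the contestable flag makes the appeal path explicit.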

2.3 Team Collaboration

Picture this scenario:

Sound familiar? XAI turns this chaos into harmony.

Product managers often work with cross-functional teams, including data scientists, engineers, business stakeholders, and marketing teams. AI models, particularly those driven by complex machine learning algorithms, can be difficult for non-technical team members to understand. XAI bridges this gap by providing a common language that everyone can use to understand how AI models function.

2.4 Competitive Edge: Standing Out in a Crowded Market 🚀

Let's be real: AI features are becoming table stakes. But TRUSTWORTHY AI features? That's your golden ticket!

Market Advantages:

Higher Conversion Rates

Users are 2.5x more likely to use AI features they understand

34% higher trial-to-paid conversion when AI decisions are explained

Better Reviews

47% more positive reviews mention trust and transparency

3.8x higher app store ratings for explainable AI features

Reduced Churn

40% lower churn rate when users understand AI decisions

65% higher feature stickiness

2.5 Future-Proofing Your Product 🔮

The AI landscape is evolving faster than a startup's pivot strategy. Here's what's coming:

Stricter Regulations

More regions adopting GDPR-like laws

Industry-specific requirements

Higher penalties for non-compliance

User Expectations

Growing demand for AI transparency

Higher privacy awareness

Increased scrutiny of AI decisions

Market Evolution

XAI as a standard feature

Competition based on trust

Integration with emerging technologies

Now that we understand WHY explainability matters, let's roll up our sleeves and get into the HOW.

3. The Five Pillars of Explainable AI: A PM’s Blueprint for Success

To implement XAI effectively, product managers should focus on five key pillars: model architecture transparency, data insights and interpretability, user-facing explanations, bias detection and fairness, and continuous improvement and feedback loops.

3.1 Model Architecture Transparency

Understanding the underlying architecture of your AI model is the first step toward making it explainable. Different types of models offer varying levels of transparency.

For instance:

Decision trees and linear regression models are inherently interpretable, while deep learning models (such as neural networks) require additional techniques like SHAP or LIME to provide explanations.

Key Considerations:

Type of Model: Is the model interpretable by design (e.g., decision tree), or does it require external explainability tools (e.g., deep neural network)?

Scalability: How does the model handle increasing data? Does this impact its transparency?

Trade-offs: What are the trade-offs between model accuracy and interpretability? Sometimes, simpler models offer more transparency but less accuracy, and vice versa.

3.2 Data Insights and Interpretability

Understanding which features are driving the model’s predictions is crucial for transparency. XAI tools like SHAP and LIME can help identify which features are the most influential in a given prediction. This allows product teams to explain AI behavior more effectively, making it easier for users to understand how certain outcomes are derived.

For example:

SHAP might show that in a financial model, features like "income," "credit score," and "employment status" played the biggest role in predicting whether a loan would be approved.
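SHAP explains individual predictions. For a quick model-wide view of which features matter, a simpler cousin is permutation-style importance: scramble one feature's column and see how much accuracy drops. The sketch below uses a fixed rotation instead of a random shuffle so the result is reproducible. The model, the data, and the deliberately irrelevant `signup_day` feature are all invented for illustration.

```python
def predict(row: dict) -> bool:
    """Toy approval model (hypothetical thresholds). It ignores signup_day."""
    return row["credit_score"] >= 650 and row["income"] >= 40


def accuracy(rows: list[dict], labels: list[bool]) -> float:
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def rotation_importance(rows: list[dict], labels: list[bool]) -> dict[str, float]:
    """Accuracy drop when one feature's column is rotated by one position."""
    base = accuracy(rows, labels)
    result = {}
    for feature in rows[0]:
        column = [r[feature] for r in rows]
        rotated = column[-1:] + column[:-1]  # each row gets another row's value
        perturbed = [{**r, feature: v} for r, v in zip(rows, rotated)]
        result[feature] = base - accuracy(perturbed, labels)
    return result


rows = [
    {"income": 50, "credit_score": 700, "signup_day": 1},
    {"income": 30, "credit_score": 720, "signup_day": 2},
    {"income": 60, "credit_score": 600, "signup_day": 3},
    {"income": 45, "credit_score": 680, "signup_day": 4},
    {"income": 80, "credit_score": 500, "signup_day": 5},
    {"income": 35, "credit_score": 640, "signup_day": 6},
]
labels = [predict(r) for r in rows]  # labels match the model, so base accuracy is 1.0

importance = rotation_importance(rows, labels)
for feature, drop in sorted(importance.items(), key=lambda kv: -kv[1]):
    print(f"{feature}: accuracy drop {drop:.2f}")
```

A feature the model never looks at shows zero drop, which is a handy sanity check. With real data you'd shuffle randomly and repeat several times to average out luck.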

3.3 User-Facing Explanations

Product managers must ensure that AI-driven decisions are explained in a way that end-users can easily understand. While technical teams may need detailed explanations, end-users often require simpler, more intuitive insights. Effective user-facing explanations can build trust by showing how the AI system works in a relatable way.

For example:

In an e-commerce platform, an AI-driven recommendation engine can explain, “You’re seeing this product because you purchased similar items in the past.”

In healthcare, an AI tool might explain a diagnosis by referencing key patient data points like blood pressure, cholesterol levels, and lifestyle habits.

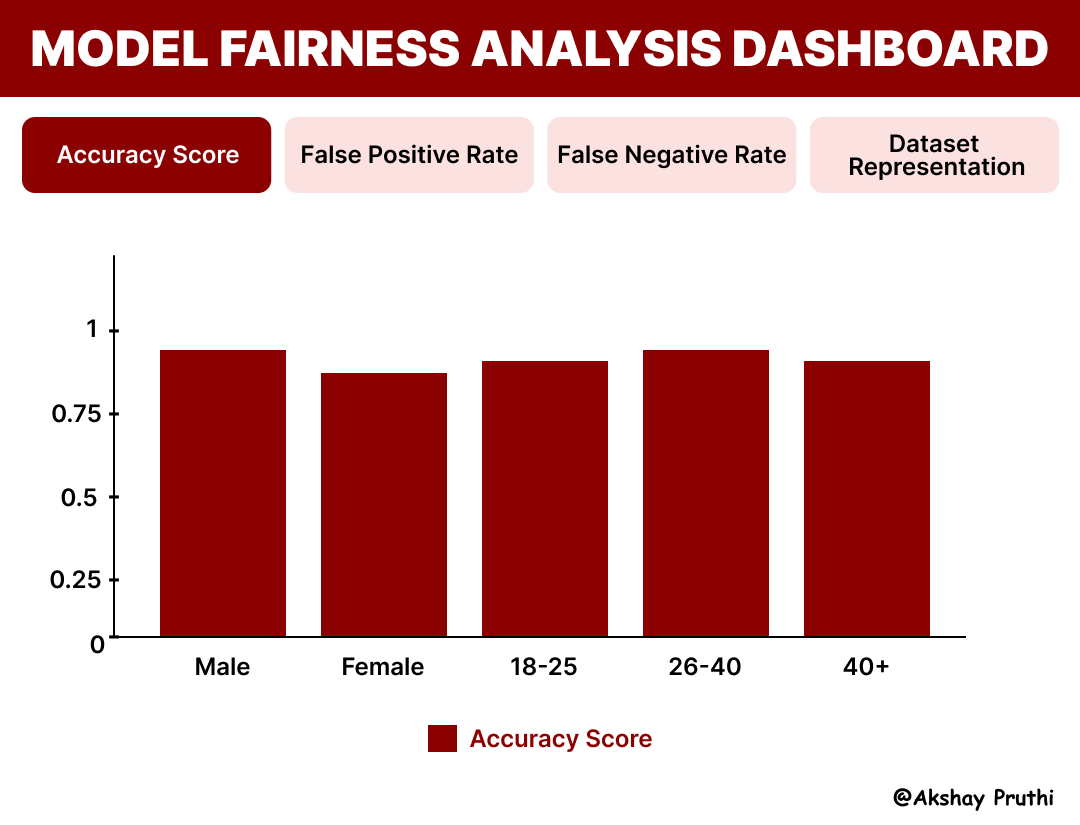
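Here's a small sketch of the translation step: taking per-feature contribution scores (from SHAP or anything similar) and turning the biggest ones into a sentence a user can read. The scores, the friendly labels, and the wording are all assumptions. Your copy and UX teams will want to own the actual phrasing.

```python
def explain_in_plain_language(
    contributions: dict[str, float],
    labels: dict[str, str],
    top_n: int = 2,
) -> str:
    """Turn the top_n largest contributions (by magnitude) into one sentence."""
    ranked = sorted(contributions, key=lambda k: abs(contributions[k]), reverse=True)
    parts = []
    for name in ranked[:top_n]:
        direction = "helped" if contributions[name] > 0 else "hurt"
        parts.append(f"{labels[name]} {direction} your result")
    return "Main factors: " + "; ".join(parts) + "."


# Hypothetical contribution scores for one loan decision.
contributions = {"credit_score": 0.35, "debt_to_income": -0.5, "income": 0.1}
labels = {
    "credit_score": "your credit score",
    "debt_to_income": "your debt-to-income ratio",
    "income": "your income",
}
message = explain_in_plain_language(contributions, labels)
print(message)
```

Notice the design choice: the user sees two factors, not twenty. Data scientists can keep the full breakdown, while the end-user message stays short enough to act on.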

3.4 Bias Detection and Fairness

AI models can unintentionally perpetuate bias if they are trained on biased data. It’s essential for product managers to ensure that their AI models are fair and do not discriminate against certain groups. XAI provides the tools to identify potential biases by comparing model performance across different demographic groups.

For instance:

A hiring algorithm trained on historical data might inadvertently favor one gender over another. By using explainability techniques, product managers can identify such biases and work to correct them.

Key Questions:

What biases might be present in the dataset?

How does the model perform across different demographic groups?

What steps can we take to mitigate bias while maintaining model performance?

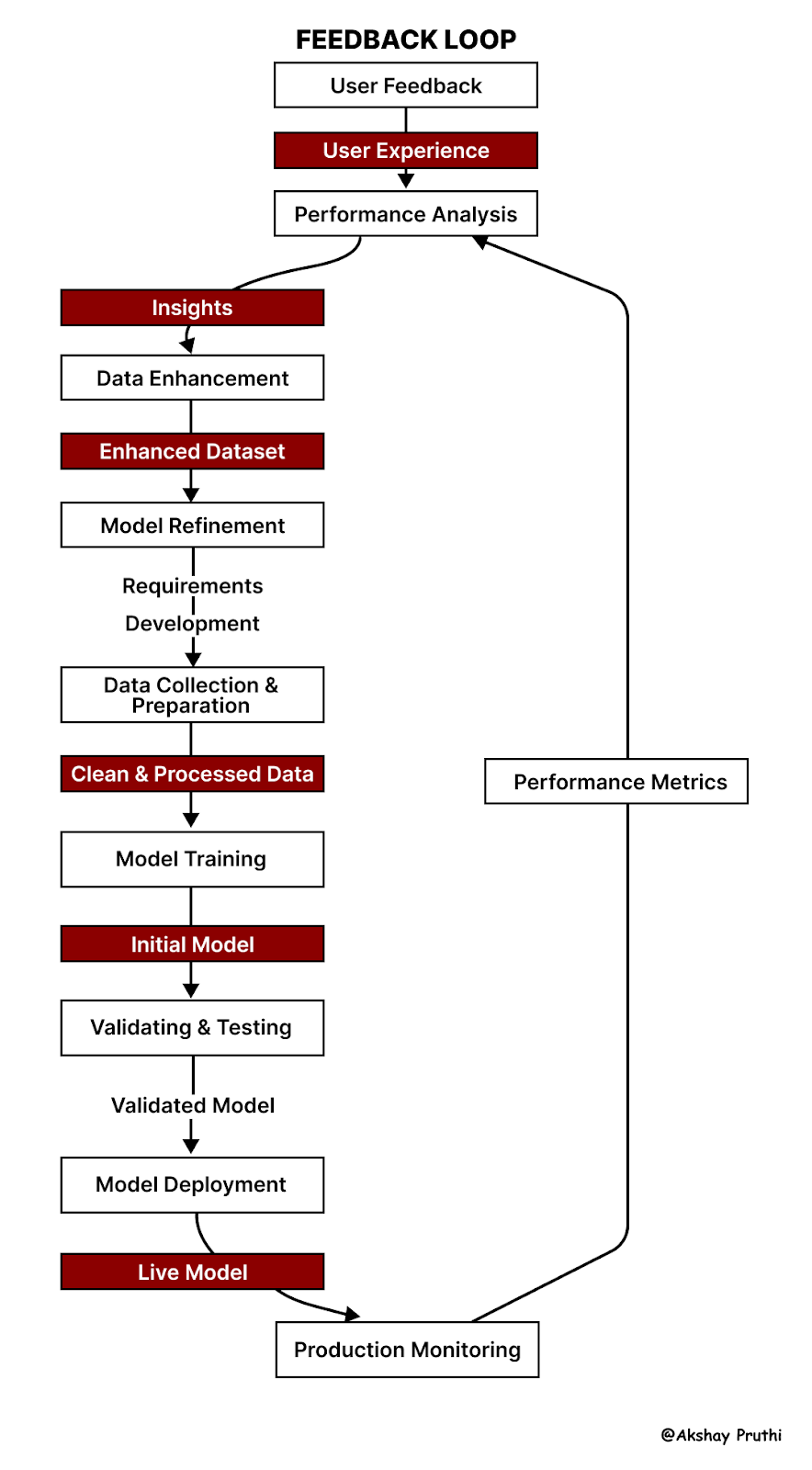
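"How does the model perform across different demographic groups?" can start with a very simple check: compare how often each group gets the favorable outcome. The sketch below computes per-group selection rates and their ratio on made-up data. The 0.8 cutoff is a common rule of thumb (the "four-fifths rule" from US employment guidance), used here as an assumption, not as a legal test for your product.

```python
from collections import defaultdict


def selection_rates(records: list[dict]) -> dict[str, float]:
    """Share of favorable outcomes per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for r in records:
        totals[r["group"]] += 1
        selected[r["group"]] += int(r["selected"])
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical hiring-model outputs; "A" and "B" are placeholder group labels.
records = [
    {"group": "A", "selected": True},
    {"group": "A", "selected": True},
    {"group": "A", "selected": False},
    {"group": "A", "selected": False},
    {"group": "B", "selected": True},
    {"group": "B", "selected": False},
    {"group": "B", "selected": False},
    {"group": "B", "selected": False},
]

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # rule-of-thumb threshold, adjust for your context
print(rates, ratio, flagged)
```

A flagged ratio doesn't prove the model is unfair, and a passing one doesn't prove it's fair. It tells you where to point your explainability tools next.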

3.5 Continuous Improvement and Feedback Loops

AI models aren’t static—they require ongoing monitoring and refinement. Product managers should gather feedback from users, track model performance over time, and make adjustments based on new data. By continuously improving the model and refining explanations, you can ensure that the AI remains accurate, transparent, and relevant.
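A feedback loop needs a trigger. Here's a minimal sketch of one: split prediction outcomes into time windows, compute accuracy per window, and flag any window that falls too far below a baseline. The window size, baseline, and tolerance are placeholder values you'd tune with your data science team.

```python
def accuracy_by_window(outcomes: list[bool], window: int) -> list[float]:
    """Accuracy for each consecutive chunk of `window` predictions."""
    chunks = [outcomes[i:i + window] for i in range(0, len(outcomes), window)]
    return [sum(chunk) / len(chunk) for chunk in chunks]


def windows_needing_review(
    accuracies: list[float], baseline: float, tolerance: float = 0.1
) -> list[int]:
    """Indexes of windows whose accuracy fell more than `tolerance` below baseline."""
    return [i for i, acc in enumerate(accuracies) if acc < baseline - tolerance]


# True = the prediction turned out correct. Hypothetical data, oldest first.
outcomes = [
    True, True, True, True,     # window 0
    True, True, True, False,    # window 1
    True, False, False, True,   # window 2
]

accuracies = accuracy_by_window(outcomes, window=4)
flagged_windows = windows_needing_review(accuracies, baseline=0.9)
print(accuracies, flagged_windows)
```

The same pattern works for explanation quality: swap "was the prediction correct?" for "did the user find the explanation helpful?" and you have a feedback loop for the explanations themselves.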

Now that we've got our pillars in place (think of them as your XAI foundation), you might be wondering: "Okay, but how do I actually start implementing this stuff?"

That's where our next section comes in handy. I've put together 50 battle-tested questions that'll help you put these pillars into practice 🛠️

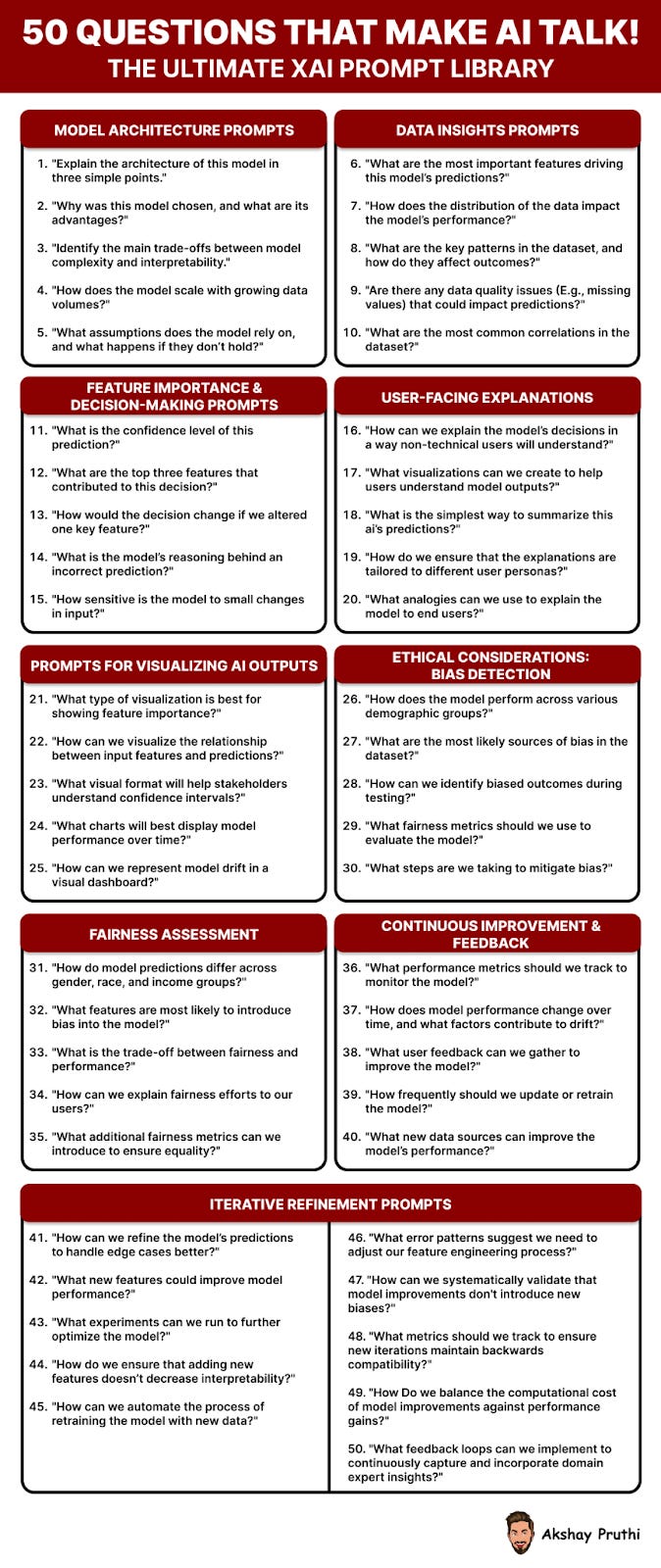

4. The Ultimate XAI Prompt Library: 50 Questions That Make AI Talk! 🗣️

Here's your comprehensive guide to asking the right questions at every stage of your XAI journey.

That's a lot of questions, right? 😅 But don't worry – you don't need to memorize all 50 right now. The key is knowing they're here when you need them.

Now that you've got all these tools in your PM toolkit, let's talk about how to put them to work. Here's your game plan for making XAI a reality in your product...

Wrapping Up

Hey, you've made it this far – congratulations! By now, you're probably thinking, "This is great, but where do I start?" Don't worry, I've got you covered.

Let's be real – implementing XAI might seem like climbing a mountain, but you don't have to reach the summit in one go. Start small, start smart.

Here's Your Game Plan:

Start With One Feature 🎯

Pick just one AI feature in your product. Maybe it's your recommendation system, or perhaps it's that risk assessment tool. Whatever it is, make it your XAI pilot project.

Use Those Prompts 📝

Remember all those questions we discussed? Pick the five that resonate most with your chosen feature. That's your starting point. No need to use all 50 at once!

Keep Learning 📚

The XAI field is evolving fast. Stay curious, keep experimenting, and don't be afraid to adjust your approach based on user feedback.

Before You Go...

Here's something I always tell my team: "AI without transparency is like a car without headlights – you might still get somewhere, but it's going to be a dangerous ride!"

Just hit the download button and get started!👇

What's Coming Next Month? 🔮

Stay tuned for our next deep dive: "Pricing Psychology - How to Optimize Your Pricing Strategy for Maximum Revenue" Trust me, you won't want to miss this one!

Got questions? Hit reply! I read every email, and I'd love to hear your XAI journey stories.

Keep building amazing things!

Cheers,

Akshay
